The tasks AI agents can do are getting longer and longer. What are the consequences for society?

METR report

Language models like OpenAI's GPT-4o are used by millions of people to answer questions. But language models have long struggled to take actions on their own. GPT-4o can answer questions about quantum mechanics, but it cannot reliably send an email.

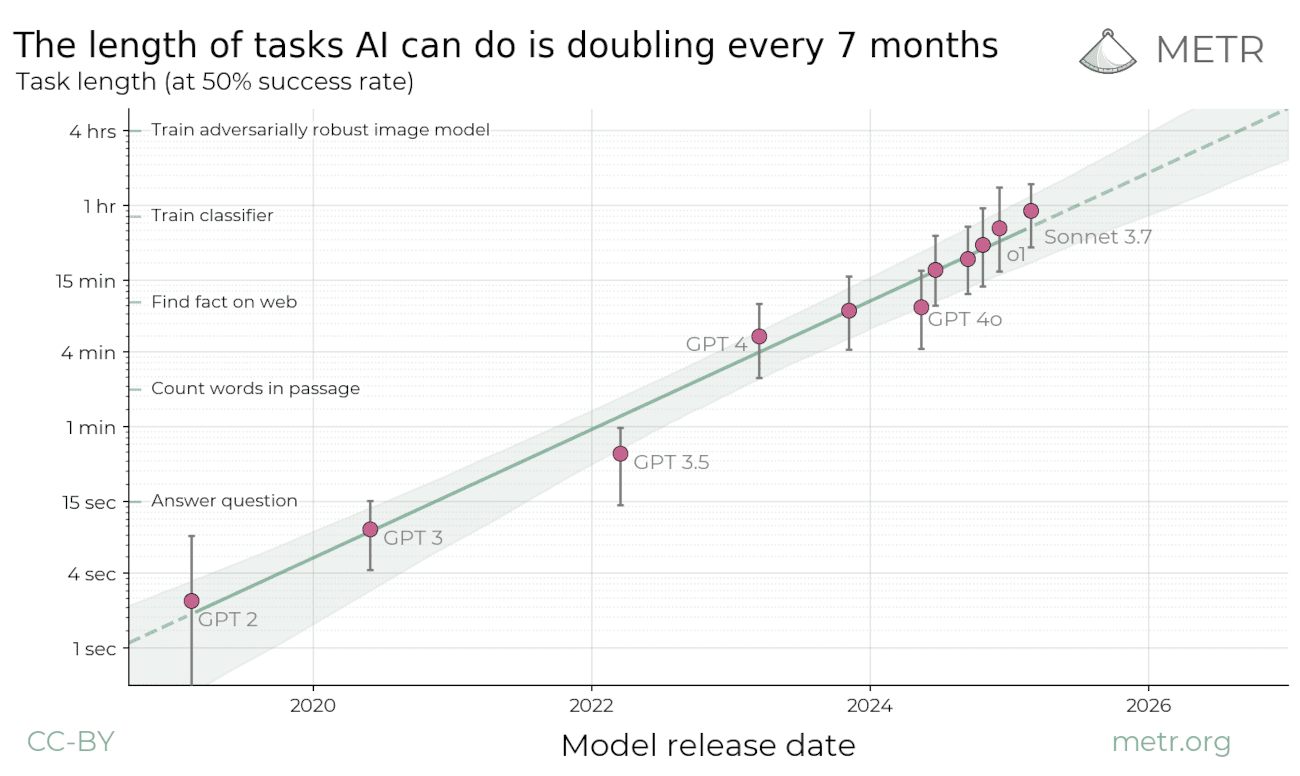

Just over two months ago, the research organization METR published a report suggesting that this limitation will likely not last much longer. The report shows that the length of tasks AI agents can complete doubles approximately every seven months. Task length is measured by how long the tasks take human experts, and "complete" means succeeding about half the time.

If METR's trend is extrapolated, AI agents could be competitive with humans on tasks lasting up to a month by 2030.
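To see why a seven-month doubling time points toward month-long tasks by the end of the decade, here is a minimal back-of-the-envelope sketch. The seven-month doubling period comes from METR's report. The starting horizon of about one hour and the 167-hour "work-month" (roughly 40 hours a week) are assumptions chosen for illustration, not figures taken from the report.

```python
import math

DOUBLING_MONTHS = 7.0       # doubling period reported by METR
START_HORIZON_HOURS = 1.0   # assumption: agents handle ~1-hour tasks at the starting point
WORK_MONTH_HOURS = 167.0    # assumption: one month of full-time human work (~40 h/week)


def horizon_hours(months_elapsed: float, start: float = START_HORIZON_HOURS) -> float:
    """Task length (in human hours) after `months_elapsed` months of steady doubling."""
    return start * 2 ** (months_elapsed / DOUBLING_MONTHS)


def months_until(target_hours: float, start: float = START_HORIZON_HOURS) -> float:
    """Invert the exponential: months needed for the horizon to grow from `start` to `target_hours`."""
    return DOUBLING_MONTHS * math.log2(target_hours / start)


if __name__ == "__main__":
    m = months_until(WORK_MONTH_HOURS)
    print(f"{m:.1f} months (~{m / 12:.1f} years)")
```

With these assumed inputs the script prints about 51.7 months, or roughly 4.3 years: a little over seven doublings take a one-hour horizon to a work-month. If the one-hour starting point is placed in early 2025, that lands in 2029, in line with the "by 2030" estimate. The result is only as good as the assumption that the trend keeps holding.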

Consequences

If this trend continues, it will have enormous consequences. AI models that only answer questions can be very useful, but they are relatively safe. AI agents that can plan and pursue goals autonomously carry much greater risks. Consider cyberattacks: advanced cyberattacks are currently beyond the capabilities of AI models, but if the trend holds, this will change quickly. Once models can carry out such attacks continuously and without human supervision, the risks posed by AI systems will rise sharply.

Yet in the grand scheme of things, this is not the greatest danger posed by advanced autonomous AI systems. The ability to perform complex tasks over weeks or months, as METR's projections suggest for the end of the decade, opens the door to agents that can develop and implement long-term strategies beyond human control. If their goals are not perfectly aligned with ours, such systems could cause large-scale accidents, manipulate societal institutions, or develop dangerous technologies in ways we cannot foresee or prevent.